Meet Your New Co-Pilot: AI Workflows Are Coming to Topcoder

At Topcoder, we know the hardest part of competing isn’t just solving the problem—it’s the silence that follows. For years, you’ve submitted code, crossed your fingers, and waited days to find out whether you passed or missed a requirement buried deep in the spec. That changes now.

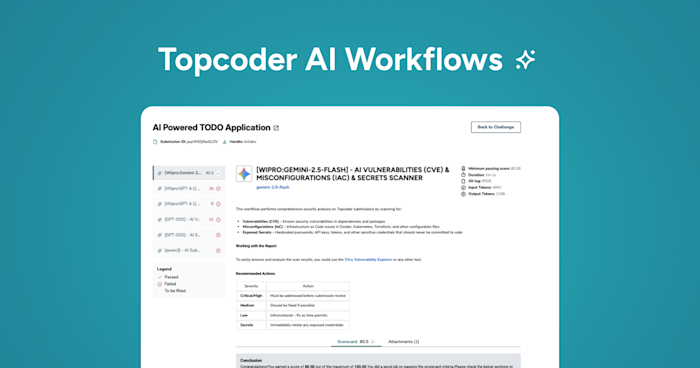

Today, we are thrilled to announce a massive upgrade to the Topcoder experience: AI Workflows. These real-time, AI-powered reviews turn every submission into a coaching session, helping you improve faster, ship more secure code, and win more often.

Quick Summary

AI Workflows replace “submit and wait” with “submit and know,” giving you detailed, standardized feedback in minutes instead of days.

They create a Thriving Loop: the AI flags issues, explains them, and lets you fix and resubmit before the phase ends—turning rejections into learning moments.

The system focuses on code quality and logic, not language fluency, making challenges fairer and more inclusive for our global community.

For customers, AI Workflows act as a Secure Sandbox, ensuring that only rigorously vetted solutions reach them. Customers who trust what they receive bring more challenges, more prize money, and better opportunities to you.

Human in the Loop: AI reviews never have final authority. Challenge reviewers remain the decision-makers and use AI feedback as an input to their judgment, not a replacement for it.

You can explore AI-enabled challenges in the updated Review UI, see each workflow's scorecard and the model it used, and give direct feedback via likes/dislikes and comments to help shape how this new era of AI-assisted competition evolves.

The Old Way vs. The New Way

We wanted to close the "Feedback Gap"—that long silence between your submission and the review results. Here is how the game is changing:

| The Old Way | The AI Workflow Way |
| --- | --- |
| Submit & Wait: You wait 24-72 hours for a human review. | Submit & Know: You get detailed feedback in minutes. |
| One Shot: A mistake often meant disqualification or a scramble at the end. | The Thriving Loop: You get a "Learning Moment." The AI spots the error, explains it, and lets you fix and resubmit before the phase ends. |
| Subjective: Depends on which reviewer picks up your ticket. | Standardized: Every submission is judged against the exact same rubric, every time. |

Why This Matters for You (The Talent)

This isn't just about speed; it's about mastery.

Imagine submitting a solution and, within minutes, hearing: "Hey, your logic is solid, but you missed a SQL injection vulnerability in login.js."

In the past, that might have been a rejection. Now, it’s a chance to learn. You fix the bug, secure the query, and resubmit. You haven't just saved your submission; you've become a better developer in the process.

This system also bridges the language gap. The AI focuses purely on your code logic, not on how well you parsed a complex English sentence in the spec. It points directly to the line of code that needs attention, making the platform fairer and more inclusive for our global community.

Why This Matters for Customers (and Why That Helps You)

For our enterprise clients, this system creates a Secure Sandbox. They know that every solution reaching them has already passed a rigorous, automated gauntlet.

Why does this help you? Because happy customers bring more challenges. By proving that Topcoder can deliver high-quality, pre-vetted code faster than anyone else, we unlock more work, more prize money, and more interesting problems for you to solve.

What’s Next? We Need You!

We are rolling this out now on select challenges. You’ll see the AI Vetting icon on your active challenges page in the updated Review UI.

A detailed overview of every AI workflow that evaluated your submission is available within minutes of submitting. You can review it on the challenge details page in the updated Review UI.

Each AI workflow in the challenge uses its own scorecard rubric with problem-specific groups, sections, and questions. For each workflow, the updated Review UI shows key details such as the score, the LLM used, the run duration, and more, so you can inspect its results and respond directly. Use likes/dislikes and threaded comments to share feedback that helps us improve the system.

Challenge reviewers see your AI workflow results, including scores, explanations, and flagged issues, and use that information as one more lens when evaluating your submission. However, AI reviews have no decision-making authority: they cannot automatically accept or reject your solution.

Final decisions about your submission—pass, fail, or anything in between—are always made by human reviewers who understand the challenge context, edge cases, and nuance. The AI’s role is to surface potential problems faster, give you richer feedback, and support reviewers with structured insights. Your work is still judged by experts; the AI just helps everyone get there more quickly and fairly.

Our ask to you:

Jump in: Participate in an AI-enabled challenge.

Test the limits: See how the AI responds.

Give Feedback: Did the AI catch something cool? Did it miss context? Tell us directly in the Scorecard UI by commenting on the AI feedback!

This is the beginning of a new era where the platform works with you to help you succeed. We can't wait to see how you build with your new co-pilot.