December 30, 2019

Introduction to Large Scale Knowledge Graphs

Introduction

In this post we cover the theoretical background of knowledge graphs (KGs). We give an illustrative example of how they are constructed and then discuss some of the best-known KG projects. This is followed by a brief discussion of how KGs can be used for question answering (QA). Finally, we conclude and point to further reading on this and related topics.

Definition

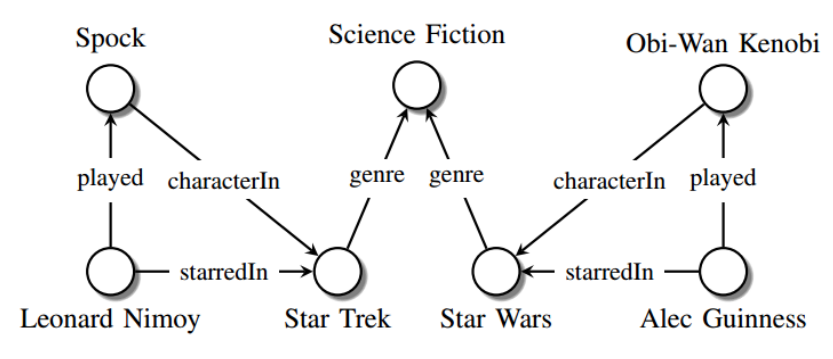

In some cases the concepts of knowledge graph (KG) and knowledge base (KB) can be used interchangeably, that is, a KG can be used to refer to a KB. However, from a more strict point of view a KG is a graph structured KB. The purpose of a KG is to model information in the form of triples or facts. Each triple is composed of a subject, predicate and an object (also referred to as SPO), where the subject and object are called entities and the predicate represents the relationship between them. Then, we can use these triples to build a directed graph, where each node will represent a subject or object entity and directed labeled edges will represent their relationship. An illustrative example of a KG can be found in the following figure:

Figure extracted from [9].
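The mapping from triples to a directed, labeled graph can be sketched in a few lines of Python. This is a minimal illustration assuming an in-memory adjacency list; the `build_graph` helper and the example triples are hypothetical, chosen for illustration rather than copied from the figure.

```python
# Each fact is a (subject, predicate, object) triple.
Triple = tuple[str, str, str]
# Adjacency list: node -> list of outgoing (edge label, target node) pairs.
Graph = dict[str, list[tuple[str, str]]]


def build_graph(triples: list[Triple]) -> Graph:
    """Turn SPO triples into a directed graph with labeled edges."""
    graph: Graph = {}
    for subj, pred, obj in triples:
        # Directed edge: subj --pred--> obj.
        graph.setdefault(subj, []).append((pred, obj))
        # Register the object as a node even if it has no outgoing edges.
        graph.setdefault(obj, [])
    return graph


triples = [
    ("Leonard Nimoy", "played", "Spock"),
    ("Spock", "characterIn", "Star Trek"),
    ("Star Trek", "genre", "Science Fiction"),
]
kg = build_graph(triples)
print(kg["Leonard Nimoy"])  # [('played', 'Spock')]
print(len(kg))              # 4 entities
```

An adjacency list keeps each entity's outgoing edges together, which suits lookups by subject; answering queries by object or by predicate would need additional indexes.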

Existing projects

Over the past decade or so a number of KGs have been introduced, such as DBpedia [2], Freebase [3] and YAGO [4, 5], among others. They differ in where their data comes from, how it is maintained and how it can be queried.

DBpedia

DBpedia extracts structured data from Wikipedia. This data can be retrieved with SQL-like queries written in SPARQL, the standard query language for RDF. The data is freely available under a CC-BY-SA license.
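For a flavor of what querying DBpedia looks like, the sketch below sends a SPARQL query over HTTP using only the Python standard library, following the GET binding of the SPARQL 1.1 Protocol and requesting the standard SPARQL JSON results format. The endpoint URL, the chosen resource (`dbr:Leonard_Nimoy`) and property (`dbo:birthPlace`), and the helper names are assumptions for illustration; check them against the live dataset, whose availability may vary.

```python
import json
import urllib.parse
import urllib.request

# Public DBpedia SPARQL endpoint (assumed; availability may vary).
ENDPOINT = "https://dbpedia.org/sparql"

# Illustrative query: resource and property IRIs should be verified against the live data.
QUERY = """
PREFIX dbo: <http://dbpedia.org/ontology/>
PREFIX dbr: <http://dbpedia.org/resource/>
SELECT ?place WHERE {
  dbr:Leonard_Nimoy dbo:birthPlace ?place .
}
"""


def build_request(query: str, endpoint: str = ENDPOINT) -> urllib.request.Request:
    """Encode a SPARQL query as an HTTP GET request (query passed in the URL)."""
    url = endpoint + "?" + urllib.parse.urlencode({"query": query})
    # Ask for the standard SPARQL JSON results format.
    return urllib.request.Request(
        url, headers={"Accept": "application/sparql-results+json"}
    )


def run_query(query: str) -> list[dict]:
    """Send the query and return the result bindings (requires network access)."""
    with urllib.request.urlopen(build_request(query), timeout=30) as resp:
        data = json.load(resp)
    return data["results"]["bindings"]
```

Calling `run_query(QUERY)` needs network access; each returned row is a dictionary keyed by variable name, so `row["place"]["value"]` holds the IRI of a result.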

Freebase

This project was created, structured and maintained collaboratively by its users. Data was queried and manipulated with the Metaweb Query Language (MQL). The service was shut down in 2016.
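MQL queries were written as JSON templates in a query-by-example style: known properties are filled in, and the properties to be returned are left as `null`. The toy matcher below mimics only that fill-in-the-blanks idea, with Python `None` standing in for `null`. It is not an implementation of MQL, and the records, the `match` helper and the `character` property are hypothetical.

```python
from typing import Any

# Hypothetical in-memory records standing in for Freebase topics.
RECORDS = [
    {"type": "/tv/tv_program", "name": "Star Trek", "genre": "Science Fiction"},
    {"type": "/film/actor", "name": "Leonard Nimoy", "character": "Spock"},
]


def match(template: dict[str, Any], records: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Return records matching every non-None template field,
    with the None ("fill me in") fields populated from the record."""
    results = []
    for record in records:
        # Every concrete value in the template acts as a constraint.
        constraints_ok = all(
            record.get(key) == value
            for key, value in template.items()
            if value is not None
        )
        if constraints_ok:
            # Echo the template's shape back, with blanks filled in.
            results.append({key: record.get(key) for key in template})
    return results


print(match({"type": "/film/actor", "name": "Leonard Nimoy", "character": None}, RECORDS))
# [{'type': '/film/actor', 'name': 'Leonard Nimoy', 'character': 'Spock'}]
```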

YAGO

Early versions were extracted automatically from Wikipedia and WordNet [4]. Later versions also draw on GeoNames and add a multilingual dimension by extracting facts from Wikipedia in ten different languages [5]. In addition, facts are annotated with spatial and temporal information.
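Temporal annotation can be pictured as attaching a validity interval to each triple. The sketch below is a simplification under our own assumptions, not YAGO's actual data model: the `TemporalFact` class, the `holdsPosition` relation name and the year-level granularity are hypothetical choices for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class TemporalFact:
    """An SPO triple extended with a (hypothetical) validity interval in years."""
    subject: str
    predicate: str
    obj: str
    start: Optional[int] = None  # first year the fact holds; None = no lower bound
    end: Optional[int] = None    # last year the fact holds; None = no upper bound

    def holds_in(self, year: int) -> bool:
        """True if the validity interval covers the given year."""
        after_start = self.start is None or self.start <= year
        before_end = self.end is None or year <= self.end
        return after_start and before_end


FACTS = [
    TemporalFact("George W. Bush", "holdsPosition", "President of the United States", 2001, 2009),
    TemporalFact("Barack Obama", "holdsPosition", "President of the United States", 2009, 2017),
]


def holders(position: str, year: int, facts: list[TemporalFact] = FACTS) -> list[str]:
    """Return every subject whose holdsPosition fact for `position` is valid in `year`."""
    return [
        f.subject
        for f in facts
        if f.predicate == "holdsPosition" and f.obj == position and f.holds_in(year)
    ]


print(holders("President of the United States", 2012))  # ['Barack Obama']
```

At year granularity both facts hold in 2009, the transition year; storing full dates would separate them.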

Application on Open-domain Question Answering

The term open-domain QA refers to answering questions from a broad range of domains. Perhaps the best-known open-domain QA system is IBM Watson [1], which became famous for beating champion players on the television quiz show Jeopardy! and drew on YAGO, DBpedia and Freebase, among other sources.

In its simplest form, answering a question with a KG means finding a triple (subject, relation/predicate, object) whose subject and relation match the question; the answer is the object entity. Until recently, this was typically implemented by filling in a query template (e.g. in SPARQL) and executing it against the KG to retrieve the answers. More sophisticated approaches have since been introduced. For instance, Bordes et al. [6] built a QA system on top of Freebase to answer simple questions, and showed that memory networks (see also [7]) can be trained effectively for this type of task.
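The simple-question setting boils down to a lookup: once the subject and relation are known, the answer is every object stored under that pair. The sketch below assumes a toy in-memory KG and a single hard-coded question pattern in place of a SPARQL template; the regular expression, the helper names and the triples are hypothetical. Real systems replace the pattern with more robust components, such as the learned models mentioned above.

```python
import re
from collections import defaultdict

# Hypothetical toy KG of (subject, predicate, object) triples.
TRIPLES = [
    ("Leonard Nimoy", "played", "Spock"),
    ("Spock", "characterIn", "Star Trek"),
    ("Star Trek", "genre", "Science Fiction"),
]


def build_index(triples):
    """Index object entities by (subject, predicate), case-insensitively."""
    index = defaultdict(list)
    for subj, pred, obj in triples:
        index[(subj.casefold(), pred.casefold())].append(obj)
    return index


# Hypothetical template: only matches "What is the <relation> of <subject>?"
TEMPLATE = re.compile(r"^what is the (?P<rel>\w+) of (?P<subj>.+?)\??$", re.IGNORECASE)


def answer(question, index):
    """Map a question onto (subject, relation) and return the matching objects."""
    match = TEMPLATE.match(question.strip())
    if match is None:
        return []  # the question does not fit the template
    key = (match.group("subj").casefold(), match.group("rel").casefold())
    # .get avoids inserting empty entries into the defaultdict.
    return index.get(key, [])


index = build_index(TRIPLES)
print(answer("What is the genre of Star Trek?", index))  # ['Science Fiction']
```

Questions outside the single pattern return no answer, which is exactly the brittleness that motivates learned approaches.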

Conclusion

In this post we presented a brief introduction to knowledge graphs. The topic is very broad and extends well beyond question answering: to applications such as digital assistants and help desks, and to research areas such as dialogue systems and relational machine learning for knowledge graphs [9], among others. For further reading, other existing KGs include Google's Knowledge Graph [8], NELL [10] and Microsoft's Satori [11].

References

[1] Ferrucci, David, et al. “Building Watson: An overview of the DeepQA project.” AI Magazine 31.3 (2010): 59-79.

[2] Auer, Sören, et al. “DBpedia: A nucleus for a web of open data.” The Semantic Web. Springer, Berlin, Heidelberg, 2007. 722-735.

[3] Bollacker, Kurt, et al. “Freebase: a collaboratively created graph database for structuring human knowledge.” Proceedings of the 2008 ACM SIGMOD International Conference on Management of Data. ACM, 2008.

[4] Suchanek, Fabian M., Gjergji Kasneci, and Gerhard Weikum. “YAGO: a core of semantic knowledge.” Proceedings of the 16th International Conference on World Wide Web. ACM, 2007.

[5] Rebele, Thomas, et al. “YAGO: A multilingual knowledge base from Wikipedia, WordNet, and GeoNames.” International Semantic Web Conference. Springer, Cham, 2016.

[6] Bordes, Antoine, et al. “Large-scale simple question answering with memory networks.” arXiv preprint arXiv:1506.02075 (2015).

[7] Sukhbaatar, Sainbayar, Jason Weston, and Rob Fergus. “End-to-end memory networks.” Advances in Neural Information Processing Systems. 2015.

[8] https://www.blog.google/products/search/introducing-knowledge-graph-things-not/

[9] Nickel, Maximilian, et al. “A review of relational machine learning for knowledge graphs.” Proceedings of the IEEE 104.1 (2016): 11-33.

[10] Carlson, Andrew, et al. “Toward an architecture for never-ending language learning.” Twenty-Fourth AAAI Conference on Artificial Intelligence. 2010.

[11] http://blogs.bing.com/search/2013/03/21/understand-your-world-with-bing/